I think I’m doing it wrong.

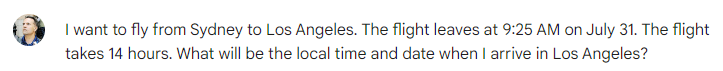

Lately, I’ve been using both Google’s Gemini and ChatGPT for my generative AI needs. I recently posed this question to both:

Gemini told me I would land in Los Angeles at 6:25 PM local time on July 30. ChatGPT told me I would land in Los Angeles at 6:25 AM on July 31.

This is a real scenario, and it kind of matters. I need a separate flight home, and it would be helpful to know which day that flight should be. So I did what I usually do when these two tools give me different answers: I said “I asked the same question to a different AI, and it responded with this,” before pasting in the response from the other tool. Then I asked which is correct.

GPT stuck to its guns, though its rationale contradicted itself: “Los Angeles is not 17 hours behind Sydney; the time difference between Sydney and Los Angeles is typically around 17 hours.” Gemini offered the equally troubling response “both responses have some validity, but the second response is the most accurate.” This is not a nuanced question. There is a single correct answer: the flight is scheduled to land at 6:25 AM local time on July 31.
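Time-zone arithmetic like this is exactly the kind of question a deterministic tool answers reliably. Here is a minimal sketch using Python's standard `zoneinfo` module. It assumes the trip took place in 2023, because the post doesn't say which year, and it uses the 6:25 AM July 31 Los Angeles landing time given above. The helper name `offset_gap_hours` is hypothetical, chosen just for this illustration.

```python
from datetime import datetime
from zoneinfo import ZoneInfo

SYDNEY = ZoneInfo("Australia/Sydney")
LOS_ANGELES = ZoneInfo("America/Los_Angeles")


def offset_gap_hours(when: datetime) -> float:
    """Hours by which Sydney's clock is ahead of Los Angeles' at the instant `when`.

    `when` must be timezone-aware. The gap depends on the date because both
    cities observe daylight saving time, in opposite seasons.
    """
    sydney_offset = when.astimezone(SYDNEY).utcoffset()
    la_offset = when.astimezone(LOS_ANGELES).utcoffset()
    return (sydney_offset - la_offset).total_seconds() / 3600


# Assumption: the year (2023) is hypothetical; the post only gives July 31.
landing_la = datetime(2023, 7, 31, 6, 25, tzinfo=LOS_ANGELES)

print(offset_gap_hours(landing_la))     # 17.0 (AEST is UTC+10, PDT is UTC-7)
print(landing_la.astimezone(SYDNEY))    # 2023-07-31 23:25:00+10:00
```

Passing an actual instant instead of hardcoding "17 hours" is the point of the design: in July the gap is 17 hours, but in January, when Sydney is on daylight time and Los Angeles is not, the same function returns 19.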

Using generative AI to do math is like using a graphing calculator to write an essay. It is supremely confident in the misguided solutions it provides. I’ve tried on numerous occasions to use GPT to solve systems of linear equations, and it’s wildly, even comically, bad at it. When I point out the errors, it invariably apologizes before giving me different wrong answers.

I’ve tried to use it for other things, too. It sort of understands how spreadsheets work, but it doesn’t get the syntax right when creating complex formulas. It kind of understands scripting and database queries, but it takes so long to correct the mistakes that it’s usually easier to just do it myself. It is always equally confident and apologetic about its mistakes.

Generative AI is just not good at this stuff. And if you think about what it’s doing behind the scenes, that’s not a huge surprise. These tools create text that is plausible for a given situation based on all of the text they have seen before. It can write a recommendation letter that looks like a recommendation letter. It can create a policy document that reads like a policy document. It can do a sixth-grade book report. It has seen sixth-grade book reports. It should be really good at dissertations. You know, except for the original research part.

It can write a Taylor Swift song. But it can’t invent Taylor Swift’s style.

The more I play with it, the more it looks like a parlor trick. I heard a presentation a few months ago where the presenter claimed that generative AI is good enough to score a “3” on the AP English Language exam. That’s fine. It’s passable, especially for text that nobody is actually going to read. But it’s not good enough for anything that I would want my name attached to.

I want AI tools that have more intelligence than artificiality. I want tools that can answer the questions that we’re not smart enough to ask. I want a tool that actually makes me smarter, rather than just automating all the routine stuff. The past is littered with innovative technologies that we’ve used to do the same old things faster. I want to do different things.

Whatever promise the future may bring for AI tools, they’re not there yet.